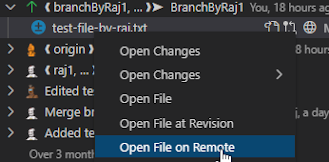

If you wish to enable the shortcuts in GitLens to open remotes, as shown in the following screen shots, here is how I did it.

Screenshots of the shortcuts:

1. Press Ctrl+Shift+P (Cmd+Shift+P on macOS) to open the Command Palette.

2. Type `Preferences: Open Settings (JSON)` and select it to open your user settings file. Newer VS Code versions name this command `Preferences: Open User Settings (JSON)`.

3. Add the following setting, replacing every `us-west-2` with your repository's region (also available as a Gist: gitlens.remotes.aws.md (github.com)):

```jsonc
// Add inside the top-level { } of settings.json, separated from neighboring settings by commas.
// Replace every "us-west-2" below with your repository's region.
"gitlens.remotes": [
    {
        "regex": "https:\\/\\/(git-codecommit\\.us-west-2\\.amazonaws\\.com)\\/v1\\/repos\\/(.+)",
        "type": "Custom",
        "name": "AWS CodeCommit",
        "protocol": "https",
        "urls": {
            "repository": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/browse?region=us-west-2",
            "branches": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/branches?region=us-west-2",
            "branch": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/browse/refs/heads/${branch}?region=us-west-2",
            "commit": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/commit/${id}?region=us-west-2",
            "file": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/browse/refs/heads/${branch}/--/${file}?region=us-west-2&lines=${line}",
            "fileInBranch": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/browse/refs/heads/${branch}/--/${file}?region=us-west-2&lines=${line}",
            "fileInCommit": "https://us-west-2.console.aws.amazon.com/codesuite/codecommit/repositories/${repo}/browse/${id}/--/${file}?region=us-west-2&lines=${line}",
            // Inserted into ${line} above: a single line, or a start-end range.
            "fileLine": "${line}",
            "fileRange": "${start}-${end}"
        }
    }
]
```
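Because the region appears in every URL, it can be easier to generate the entry than to edit it by hand. The following Python sketch is an illustration and is not part of GitLens. It builds the same entry for a given region, prints it as JSON, and checks that the regex matches a sample HTTPS clone URL. The helper names `codecommit_remote` and `expand`, the repository `my-repo`, the commit `abc123`, and the file `src/app.py` are all hypothetical. `expand` only approximates GitLens's own `${...}` substitution. The sketch also makes two assumptions about GitLens's behavior: that it uses the second capture group as `${repo}`, and that it expands `fileLine` before inserting the result into `${line}`.

```python
import json
import re


def codecommit_remote(region: str) -> dict:
    """Build a GitLens custom-remote entry for one AWS region (hypothetical helper)."""
    # ${{name}} inside an f-string emits a literal ${name} placeholder for GitLens.
    base = f"https://{region}.console.aws.amazon.com/codesuite/codecommit/repositories/${{repo}}"
    q = f"?region={region}"
    in_branch = f"{base}/browse/refs/heads/${{branch}}/--/${{file}}{q}&lines=${{line}}"
    return {
        # Group 1 = domain, group 2 = repository path (assumed to become ${repo}).
        "regex": rf"https:\/\/(git-codecommit\.{region}\.amazonaws\.com)\/v1\/repos\/(.+)",
        "type": "Custom",
        "name": "AWS CodeCommit",
        "protocol": "https",
        "urls": {
            "repository": f"{base}/browse{q}",
            "branches": f"{base}/branches{q}",
            "branch": f"{base}/browse/refs/heads/${{branch}}{q}",
            "commit": f"{base}/commit/${{id}}{q}",
            "file": in_branch,
            "fileInBranch": in_branch,
            "fileInCommit": f"{base}/browse/${{id}}/--/${{file}}{q}&lines=${{line}}",
            "fileLine": "${line}",
            "fileRange": "${start}-${end}",
        },
    }


def expand(template: str, **tokens: str) -> str:
    """Replace each ${name} placeholder (an approximation of GitLens's interpolation)."""
    return re.sub(r"\$\{(\w+)\}", lambda m: tokens[m.group(1)], template)


if __name__ == "__main__":
    remote = codecommit_remote("us-west-2")
    # Copy the "gitlens.remotes" key from this output into settings.json.
    print(json.dumps({"gitlens.remotes": [remote]}, indent=4))

    # Sanity check: the regex must match the repository's HTTPS clone URL.
    clone_url = "https://git-codecommit.us-west-2.amazonaws.com/v1/repos/my-repo"
    match = re.match(remote["regex"], clone_url)
    assert match is not None
    repo = match.group(2)

    urls = remote["urls"]
    print(expand(urls["commit"], repo=repo, id="abc123"))
    # Single-line links: expand fileLine first, then substitute it into ${line}.
    line = expand(urls["fileLine"], line="42")
    print(expand(urls["file"], repo=repo, branch="main", file="src/app.py", line=line))
```

To produce the entry for another region, call `codecommit_remote` with that region string, for example `"eu-west-1"`. Its regex will then reject clone URLs from other regions, which is why the region must be changed consistently everywhere.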

Reference: https://github.com/Axosoft/vscode-gitlens#remote-provider-integration-settings-