Came across this slightly old series of posts by the developer division at Microsoft that shows how they used TFS to manage their software development (specifically of Orcas).

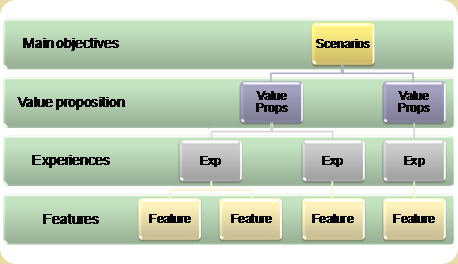

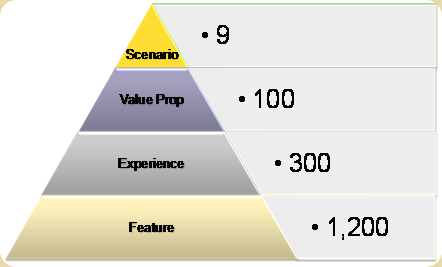

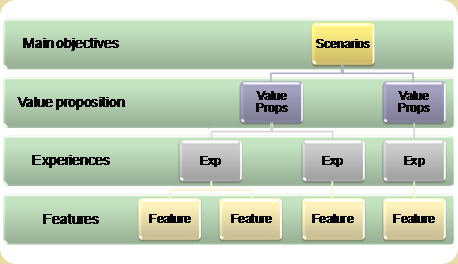

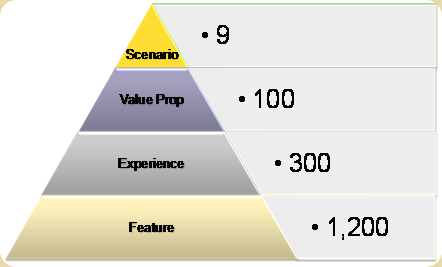

The process consisted of planning stages (scenarios, value propositions, experiences) and an implementation stage (features). Experiences correspond most directly to epics, and the goal was to break each epic (experience) down into features that could easily be implemented within one or two iterations. One interesting thing to note is how a handful of scenarios can break down into thousands of features (for every epic, or experience, there were approximately four features).
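To make the fan-out concrete, here is a minimal Python sketch of the four-level planning hierarchy as a tree. It is an illustration only. `Node`, `build` and the fan-out numbers are hypothetical, apart from the roughly four features per experience mentioned above. None of this is a TFS type or a figure taken from the series.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Planning levels, top to bottom; only "feature" is implemented by a crew.
LEVELS = ["scenario", "value proposition", "experience", "feature"]


@dataclass
class Node:
    """One item in the planning hierarchy (hypothetical model, not a TFS type)."""
    kind: str
    title: str
    children: list[Node] = field(default_factory=list)

    def count(self, kind: str) -> int:
        """Count this node and all of its descendants of the given kind."""
        own = 1 if self.kind == kind else 0
        return own + sum(child.count(kind) for child in self.children)


def build(level: int, fanout: list[int], title: str) -> Node:
    """Build a uniform tree where each node at `level` has fanout[level] children."""
    node = Node(LEVELS[level], title)
    if level + 1 < len(LEVELS):
        for i in range(fanout[level]):
            node.children.append(build(level + 1, fanout, f"{title}.{i + 1}"))
    return node


# Hypothetical fan-out: 3 value propositions per scenario, 5 experiences per
# value proposition, and about 4 features per experience (the ratio above).
scenario = build(0, [3, 5, 4], "S1")
print(scenario.count("experience"), scenario.count("feature"))  # 15 60
```

Even with these small assumed fan-outs, a single scenario yields 60 features. With larger fan-outs, a handful of scenarios can plausibly reach the thousands.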

Each feature is implemented by a “feature crew”.

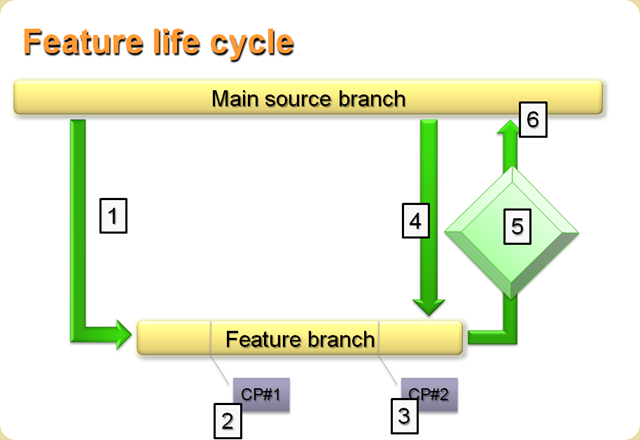

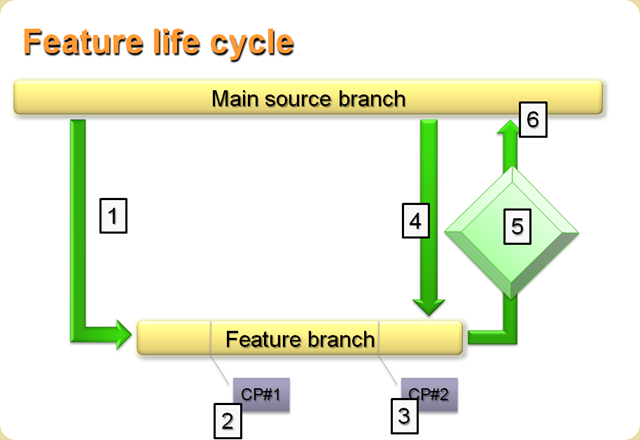

Development for each feature occurs on a branch pulled from the trunk (Chapter 2).

Chapter 2 is extremely interesting as it goes into the lifecycle described by the above diagram.

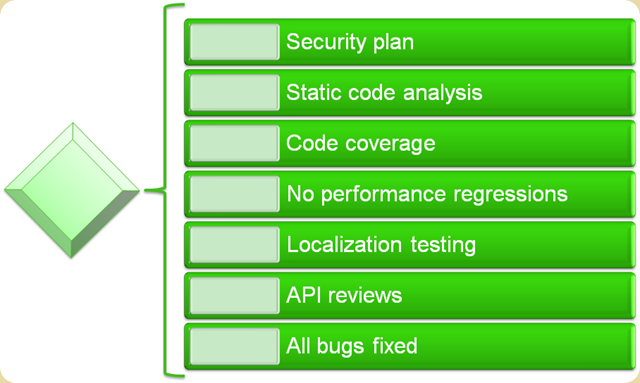

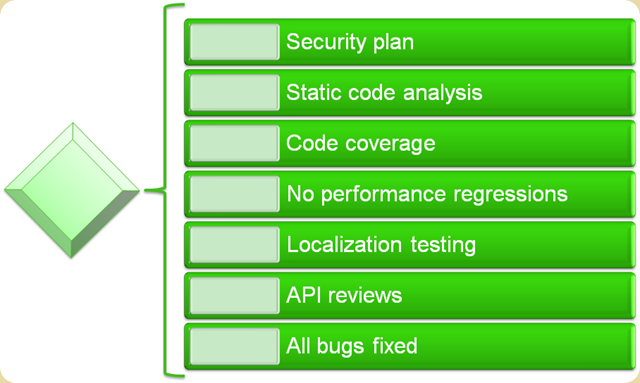
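The Chapter 2 lifecycle runs in a fixed order:

1. Branch the code.
2. Demo the design at checkpoint 1.
3. Demo the completed functionality at checkpoint 2.
4. Merge main into the branch.
5. Satisfy the quality gates.
6. Check in.

This sequence can be modeled as an ordered state machine in which the gate stage blocks until every gate passes. The following is a minimal Python sketch, not TFS code. `Stage`, `FeatureCrew`, `QualityGateError` and the gate names `gate_a`/`gate_b` are hypothetical placeholders, because the actual gate categories are not listed here.

```python
from enum import Enum


class Stage(Enum):
    """Stages of a feature crew's branch, in the order described in Chapter 2."""
    BRANCHED = 1          # feature branch pulled from main
    CHECKPOINT_1 = 2      # design demoed, feedback gathered
    CHECKPOINT_2 = 3      # completed functionality demoed
    MERGED_FROM_MAIN = 4  # latest main merged into the feature branch
    GATES_PASSED = 5      # every quality gate satisfied
    CHECKED_IN = 6        # branch checked in to main


class QualityGateError(Exception):
    """Raised when a crew tries to pass the gates while some still fail."""


class FeatureCrew:
    def __init__(self, name: str, gates: dict[str, bool]) -> None:
        self.name = name
        self.stage = Stage.BRANCHED
        self.gates = gates  # gate name -> satisfied? (names are placeholders)

    def advance(self) -> Stage:
        """Move to the next stage; the gate stage is blocked until all gates pass."""
        if self.stage is Stage.CHECKED_IN:
            raise ValueError(f"{self.name} is already checked in")
        nxt = Stage(self.stage.value + 1)
        if nxt is Stage.GATES_PASSED:
            failing = sorted(g for g, ok in self.gates.items() if not ok)
            if failing:
                raise QualityGateError(f"{self.name}: failing gates {failing}")
        self.stage = nxt
        return nxt


crew = FeatureCrew("example-feature", {"gate_a": True, "gate_b": False})
for _ in range(3):
    crew.advance()  # CHECKPOINT_1, CHECKPOINT_2, MERGED_FROM_MAIN
try:
    crew.advance()
except QualityGateError as err:
    print(err)  # example-feature: failing gates ['gate_b']
crew.gates["gate_b"] = True
crew.advance()
print(crew.advance())  # Stage.CHECKED_IN
```

In this sketch the crew stays at `MERGED_FROM_MAIN` until the failing gate is fixed. That mirrors the idea that main only receives code that has cleared every gate.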

Some of the interesting quality gate categories used were:

Chapter 4 discusses how features were assigned “ball-park” estimates. Estimates for all features were aggregated into a single excel work-book which was then used to determine the order in which features were to be implemented.

In chapter 5, the post discusses about how TFS allowed the different feature teams to use whatever development model that suited them (Agile, Waterfall, etc). Communication of the state of the feature implementation was done via a single tab in TFS that needed to be updated once per week. The tab itself is minimal in the amount of information it collects. (looks like the dev-div used a custom template and the post describes the Progress tab in detail). There were 4 dates that were key during a feature implementation. All dates did not have to be committed to at the beginning of the feature implementation project. Instead the process followed was:

An interesting thing to note in this post is the culture shift that occurred by the transparency that occurred with data that was available up and down the chain regarding project status:

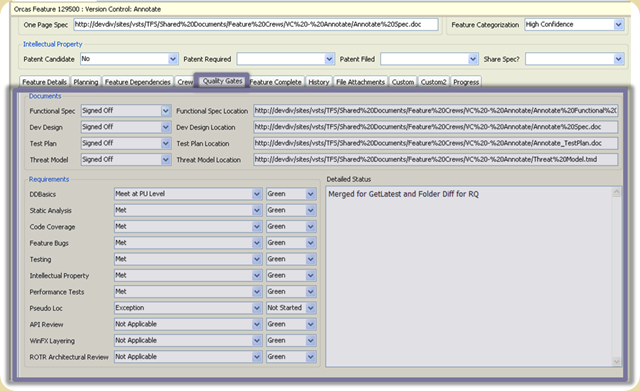

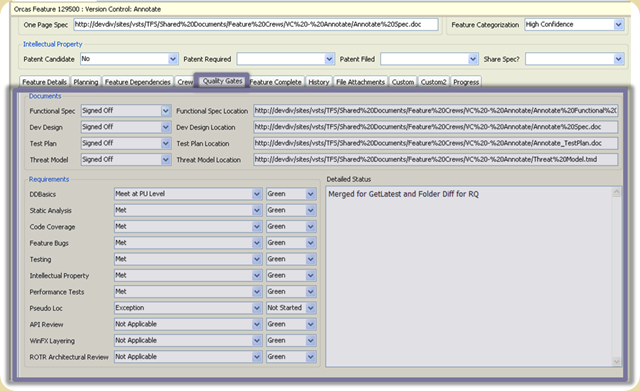

Quality gate tracking is discussed in Chapter 8. The tracking itself is done via the Quality Gates tab in TFS.

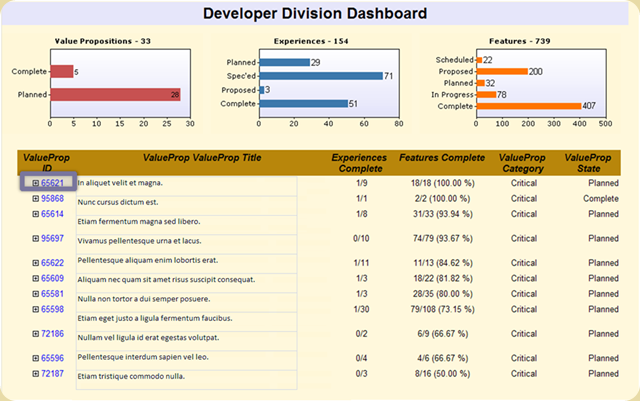

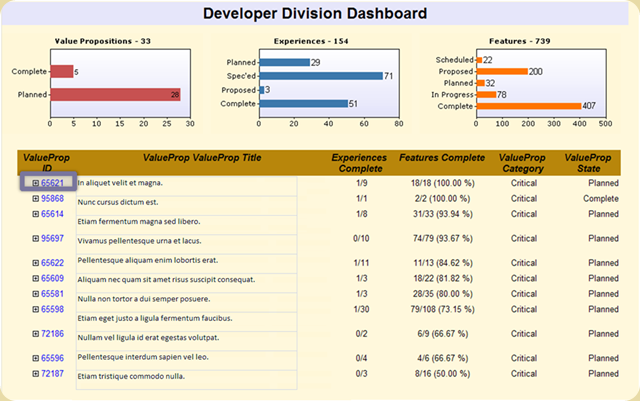

The final chapter (Chapter 9) shows the reports that was used by the top management to keep track of the progress that was being made across scenarios, value proposition, experiences and features. (basically a drill-down report).

The entire series is an extremely good read and I strongly suggest that you read them (as what I have done here is to only summarize the series, mainly so that I would have them all in one location). Thanks to Greg Boer for doing an excellent job of documenting how TFS was being used within the dev-division at Microsoft.

More info:

Ever Wonder How We Build Software at Microsoft? Now You Can Read All About It!

- Our process

- Feature Crews

- Planning a release

- Tracking Progress

- Tracking multiple projects

- Tracking Risk

- Tracking quality gates

- Transparency in reporting

The process involved the planning stages (scenario, value proposition, experience) and the implementation stage (features). The experiences most directly relate to epics. The goal was to break down epics (experiences) into features that could be easily implemented within an iteration (or two). One of the interesting things to note is that how a handful of scenarios can break-down into 1000s of scenarios. (for every epic (experience) there were approximately 4 features).

Each feature is implemented by a “feature crew”

Development occurs of a branch pulled from the trunk for each feature (Chapter 2)

Chapter 2 is extremely interesting as it goes into the lifecycle described by the above diagram.

- Branch code.

- Checkpoint 1: demo design and get feedback

- Checkpoint 2: demo completed functionality

- Merge code from main into the branch

- Quality gates: before check-in to main branch can be done, a set of quality gates need to be satisfied

- Code is checked in. Because of the quality gates, the quality of the main branch is kept high

Some of the interesting quality gate categories used were:

Chapter 4 discusses how features were assigned “ball-park” estimates. Estimates for all features were aggregated into a single excel work-book which was then used to determine the order in which features were to be implemented.

In chapter 5, the post discusses about how TFS allowed the different feature teams to use whatever development model that suited them (Agile, Waterfall, etc). Communication of the state of the feature implementation was done via a single tab in TFS that needed to be updated once per week. The tab itself is minimal in the amount of information it collects. (looks like the dev-div used a custom template and the post describes the Progress tab in detail). There were 4 dates that were key during a feature implementation. All dates did not have to be committed to at the beginning of the feature implementation project. Instead the process followed was:

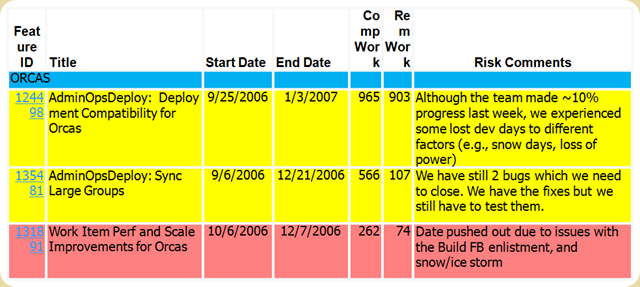

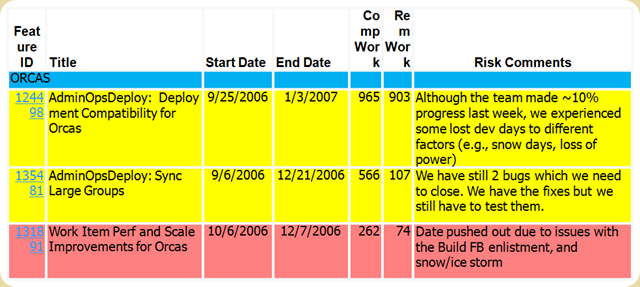

At the start of the feature crew, the team needed to commit to a checkpoint 1 date, and estimate the checkpoint 2 and finish dates. At checkpoint 1, the team would commit to a checkpoint 2 date, and estimate the finish date. At checkpoint 2, they would commit to a finish date.Another interesting item tracked was the risk. This was tracked via 3 codes: green, yellow and red

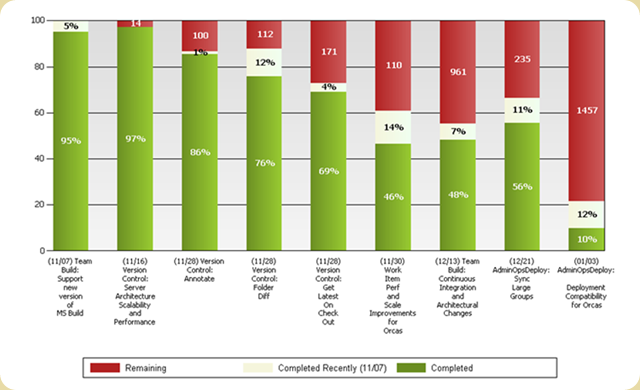

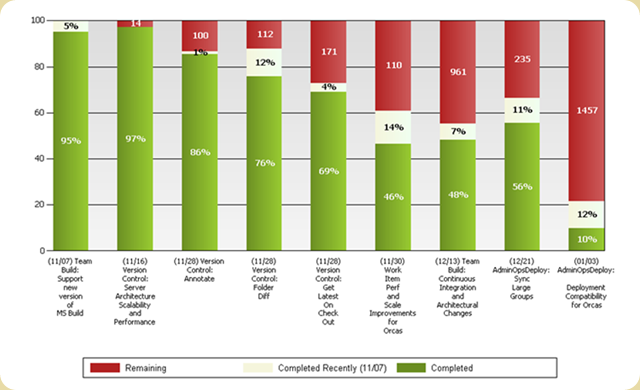

Green = We will meet the datesTracking of multiple projects (chapter 6) was done via reports generated of off TFS. This report was used in a weekly meeting of feature team managers, the program manager and product manager. The report presented all features that were currently in flight. This was done using a red, yellow, green report that showed:

Yellow = We are at risk

Red = We will miss the dates

- Green - The percentage of work completed, when compared to the entire project

- Yellow - The percentage of work completed in the last reporting period, typically 1 week.

- Red - The number of hours of work remaining.

- You'll also note that the date the feature crew is scheduled to complete is included with the name of the feature crew.

An interesting thing to note in this post is the culture shift that occurred by the transparency that occurred with data that was available up and down the chain regarding project status:

In this culture of openness, management had to let go of "holding people to their original dates, no matter what" and those on the feature crew had to let go of "presenting the project status in the best possible light" or "hiding bad news".Chapter 7 discusses the risk information that is entered into the Progress tab that was discussed earlier in chapter 5. The risk information is pulled into an excel report and all those teams that are not green need to explain why the progress tab as well as in a weekly meeting as to why the team maybe slipping. Again an interesting part about this post is that the division was making the mind shift to transparency, where it was better to let the team know early than latter that you were going to be late. The team itself realized that sometimes slips occurred because of issues that were out of the control of the team.

Quality gate tracking is discussed in Chapter 8. The tracking itself is done via the Quality Gates tab in TFS.

The final chapter (Chapter 9) shows the reports that was used by the top management to keep track of the progress that was being made across scenarios, value proposition, experiences and features. (basically a drill-down report).

The entire series is an extremely good read and I strongly suggest that you read them (as what I have done here is to only summarize the series, mainly so that I would have them all in one location). Thanks to Greg Boer for doing an excellent job of documenting how TFS was being used within the dev-division at Microsoft.

More info:

Ever Wonder How We Build Software at Microsoft? Now You Can Read All About It!
